About

I'm a senior lecturer (equivalent to a tenure-track assistant professor) in the Department of Statistics and Operations Research, School of Mathematical Sciences, Tel Aviv University. Before that, I was a postdoctoral research associate in Princeton University's Program in Applied and Computational Mathematics, working in Amit Singer's group. I did my Ph.D. in the Department of Computer Science and Applied Mathematics at the Weizmann Institute of Science, where Boaz Nadler was my doctoral advisor.

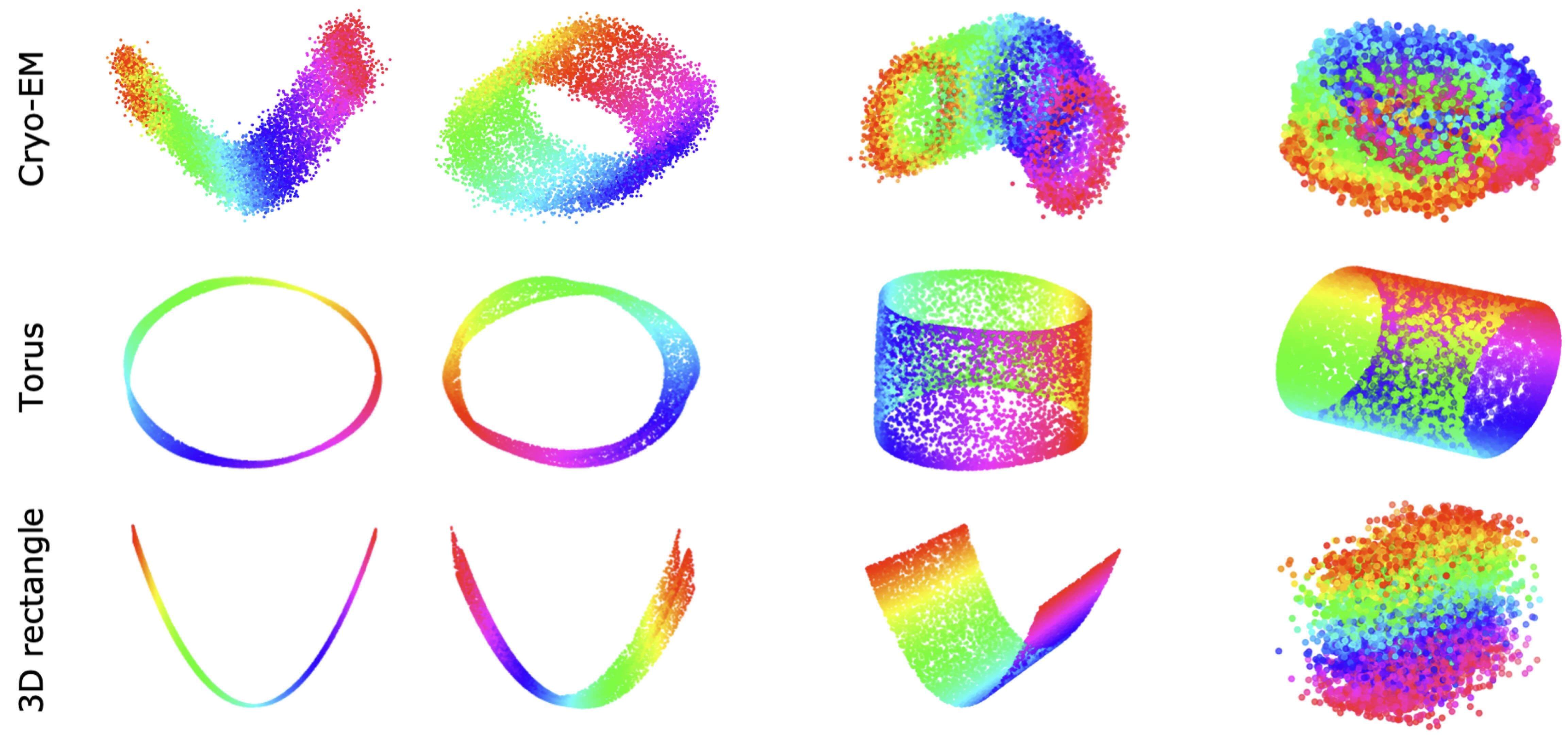

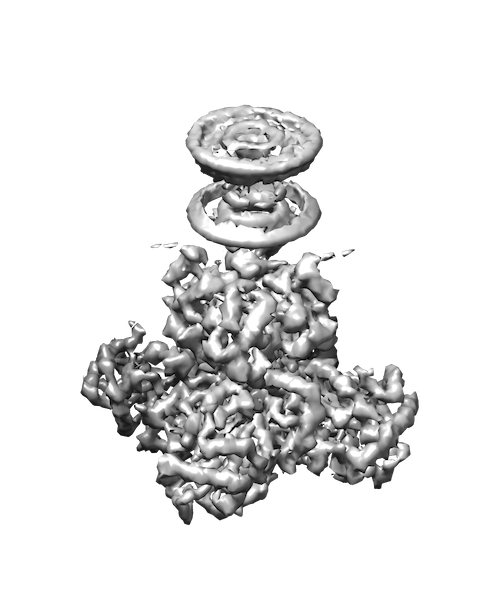

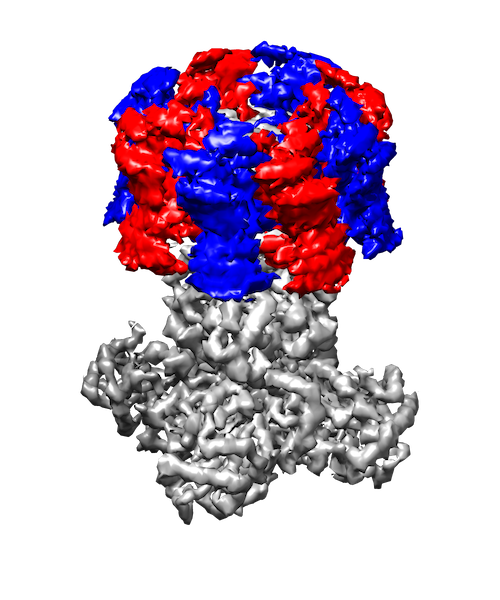

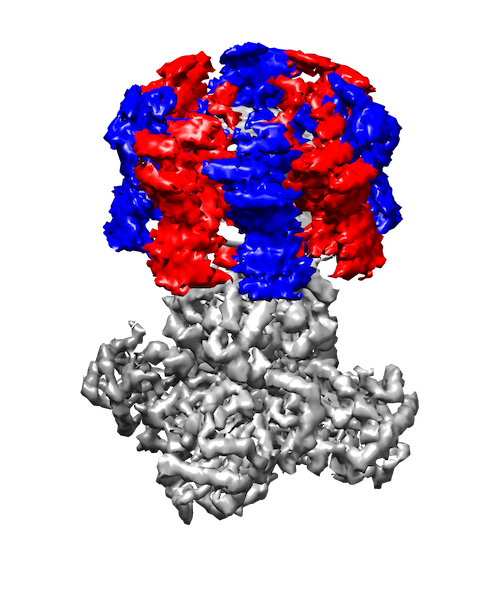

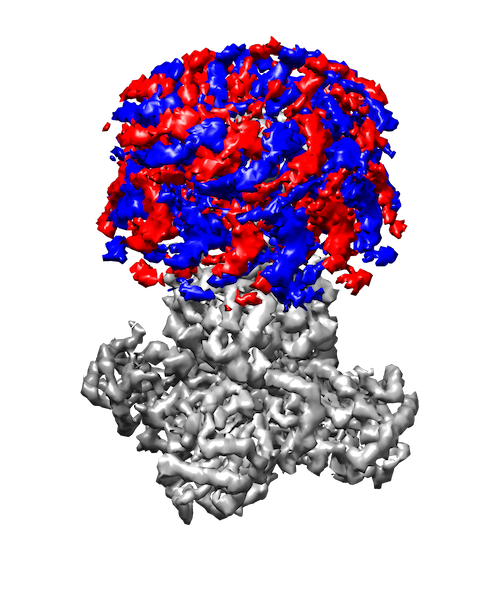

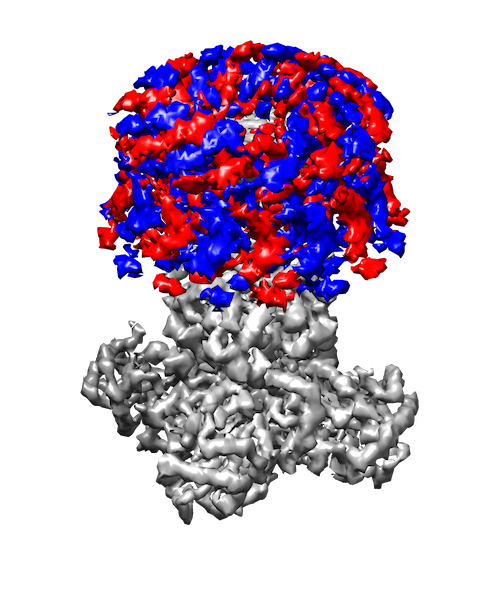

My research interests are broadly in the development of methodology for statistics and machine learning. More specifically, my current focus is developing tools for mapping and analyzing large volumetric data sets. This is motivated by a key challenge in structural biology: the 3D reconstruction and analysis of flexible proteins and other macromolecules from cryo-electron microscopy data sets.

Interested in doing research with me? I sometimes have openings at the MSc, PhD and postdoc levels for people with a strong mathematical foundation. Please email me and we'll set up a meeting.

Video Presentations:

• One-World Cryo-EM talk:

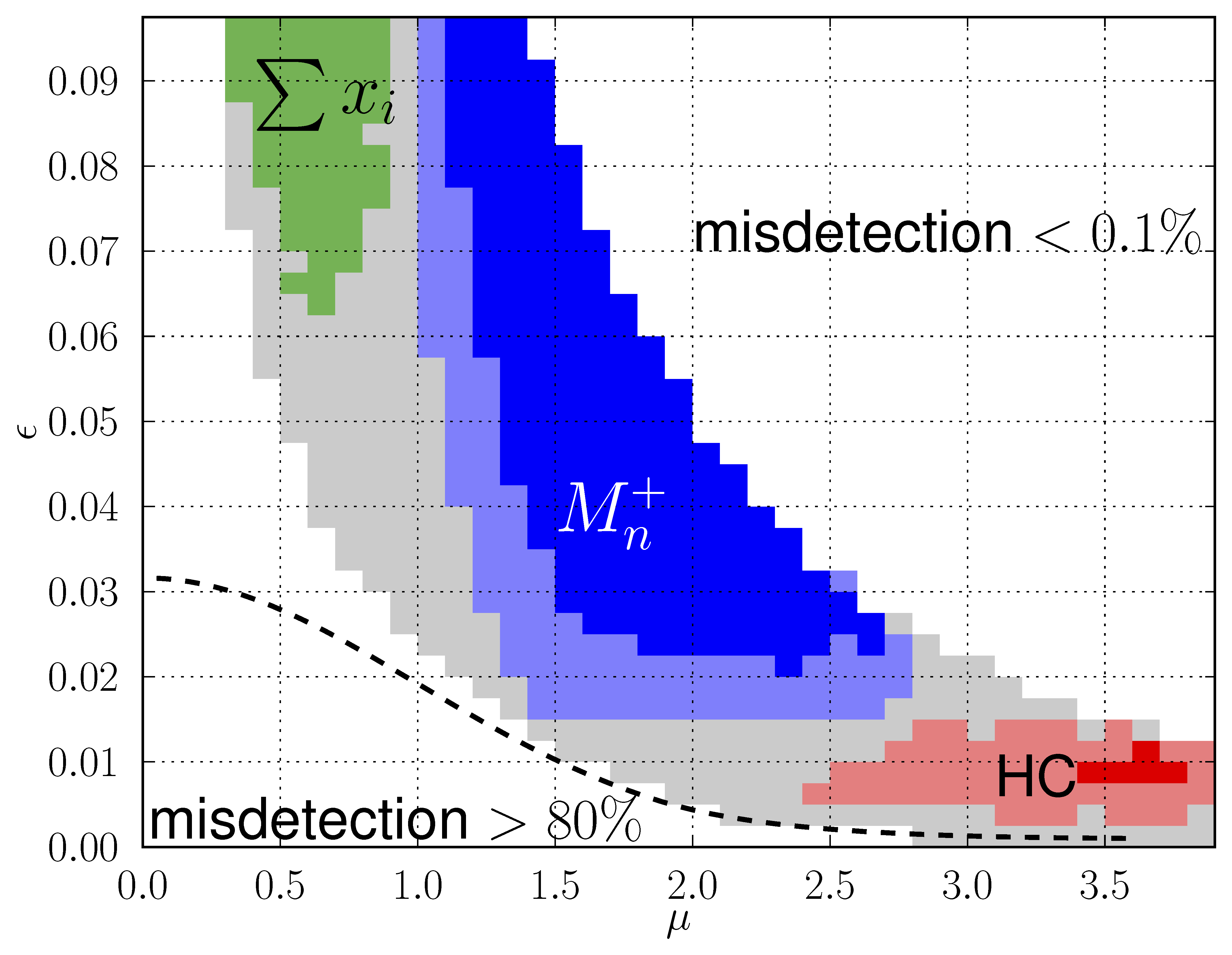

Tools for heterogeneity in cryo-EM: manifold learning, disentanglement and optimal transport

• Broad overview of my research (as of 3/2021) at INRIA's DataShape seminar:

Nonparametric estimation of high-dimensional shape spaces with applications to structural biology

• Presentation for third-year mathematics students at Tel Aviv University (in Hebrew):

Shape spaces, dimensionality reduction and product manifold factorization

Funding:

• Israel Science Foundation (ISF)

• United States-Israel Binational Science Foundation (BSF)

• United States National Science Foundation (NSF)

Address: Schreiber 202, School of Mathematical Sciences, Tel Aviv University.